Build custom AI agents, deployed instantly to the web

main.tsx


import { ObserverClient, ObserverHandler } from "./observer.ts";
import { getErrorText, makeHeaders, makeUrl } from "./utils.ts";

const MODEL = "gpt-realtime";
const TRANSCRIBE_MODEL = "gpt-4o-transcribe";
const NR_TYPE = "near_field";
const INSTRUCTIONS = `
Greet the user in English, and thank them for trying the new OpenAI Realtime API.
Give them a brief summary based on the list below, and then ask if they have any questions.
Answer questions using the information below. For questions outside this scope,
apologize and say you can't help with that.
When the user says goodbye or you think the call is over, say a brief goodbye and then
invoke the end_call function.
---
Short summary:
- The Realtime API is now generally available, and has improved instruction following, voice naturalness, and audio quality.
There are two new voices and it's easier to develop for. There's also a new telephony integration for phone scenarios.
Full list of improvements:
- generally available (no longer in beta)
- two new voices (Cedar and Marin)
- improved instruction following
- enhanced voice naturalness
- higher audio quality
- improved handling of alphanumerics (eg, properly understanding credit card and phone numbers)
- support for the OpenAI Prompts API
- support for MCP-based tools
- auto-truncation to reduce context size
- native telephony support, making it simple to connect voice calls to existing Realtime API applications
- when using WebRTC, you can connect without needing an ephemeral token
- when using WebRTC, you can now control sessions (including tool calls) from the server
- when using WebRTC, you can now send video to the model, and it can respond based on what it sees
- many helpful bugfixes!
`;
const VOICE = "marin";
const HANGUP_TOOL = {
  type: "function",
  name: "end_call",
  description: `
Use this function to hang up the call when the user says goodbye
or otherwise indicates they are about to end the call.`,
};

// Builds the declarative session configuration for a Realtime API session.
export function makeSession() {
  return {
    type: "realtime",
    model: MODEL,
    instructions: INSTRUCTIONS,
    audio: {
      input: {
        noise_reduction: { type: NR_TYPE },
        transcription: { model: TRANSCRIBE_MODEL },
      },
      output: { voice: VOICE },
    },
    tools: [HANGUP_TOOL],
  };
}

// AgentHandler implements the agent runtime behavior (tool calling, etc).
class AgentHandler implements ObserverHandler {
  private audioActive = false;
  private callEnding = false;

  constructor(private client: ObserverClient, private callId: string) {}

  async onInit() {
    // Trigger a response immediately, with a slight delay to prevent clipping.
    setTimeout(() => this.client.createResponse(), 250);
  }

  async onTool(name: string, _args: any) {
    // If audio is playing, defer the hangup until it finishes.
    // Otherwise, end the call now.
    if (name === HANGUP_TOOL.name) {
      if (this.audioActive) {
        this.callEnding = true;
      } else {
        await this.hangup();
      }
    }
  }

  async onOutputAudioStarted() {
    this.audioActive = true;
  }

  async onOutputAudioStopped() {
    // Shut down the call and websocket once audio has finished spooling.
    this.audioActive = false;
    if (this.callEnding) {
      await this.hangup();
    }
  }

  async onOutputAudioCleared() {
    this.audioActive = false;
  }

  private async hangup() {
    const url = makeUrl(this.callId, "hangup");
    const resp = await fetch(url, { method: "POST", headers: makeHeaders() });
    if (!resp.ok) {
      console.error(`🔴 end call failed: ${await getErrorText(resp)}`);
      return;
    }
    console.log("✅ call ended");
  }
}

// Creates the runtime handler for the session.
export function createHandler(client: ObserverClient, callId: string) {
  return new AgentHandler(client, callId);
}

The quickest way to take your AI agent from 0 to 1

main.tsx


import { OpenAI } from "https://esm.town/v/std/openai";

const openai = new OpenAI();

Choose your model

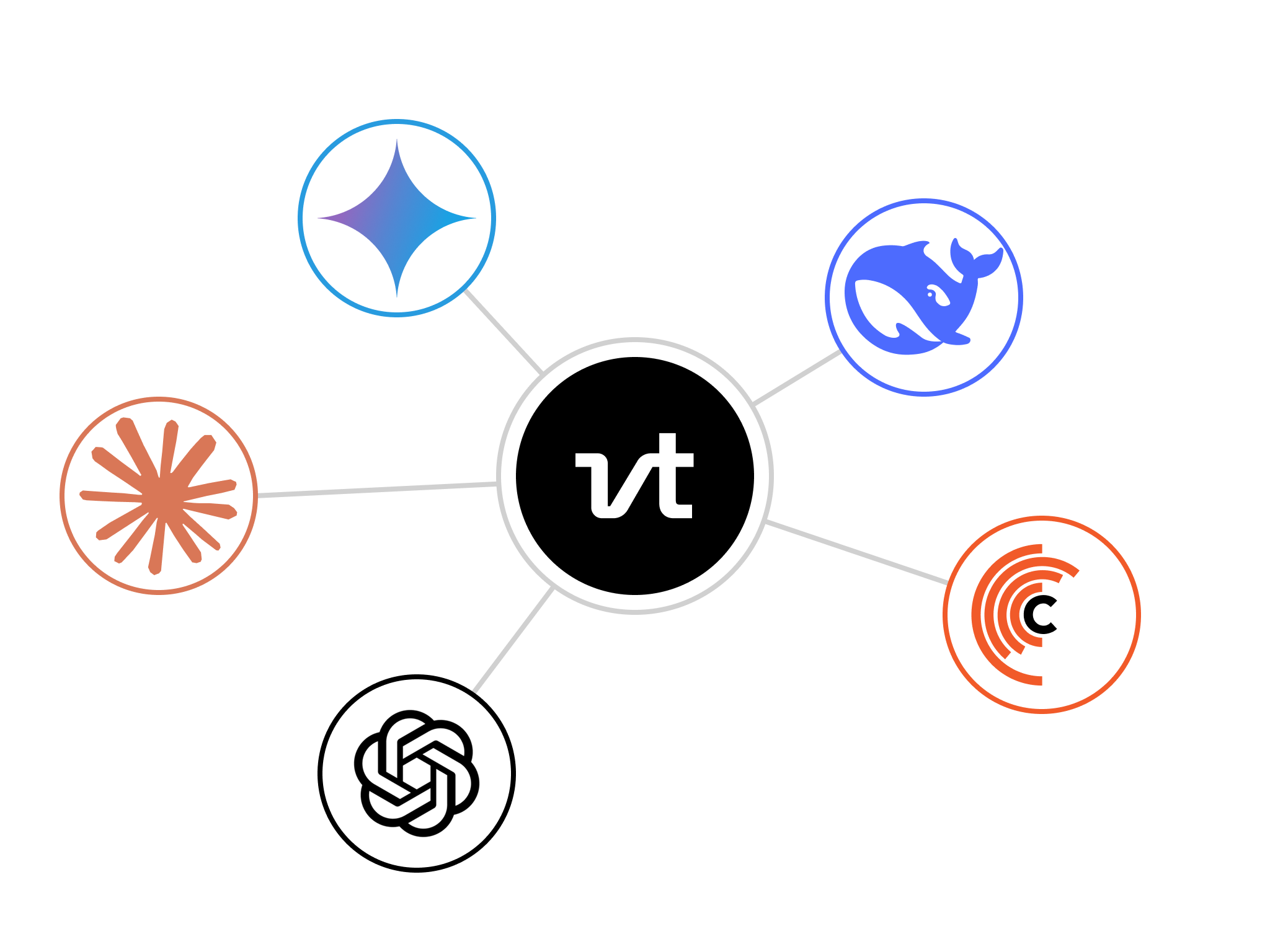

Built-in OpenAI access, as well as compatibility with AI SDKs from Anthropic, Vercel, and many others.

Run jobs on a schedule

Agentic behavior is a breeze with both explicit triggers and the ability to run on a schedule you define. Perfect for daily summaries, alerts, or generation tasks.

main.tsx


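// ENDPOINT, MODEL, formatMessage, and the openai client are assumed to be defined elsewhere in the val.
export default async function () {
  // fetch resolves to a Response, so decode the body before formatting it.
  const resp = await fetch(ENDPOINT);
  const data = await resp.json();
  const messages = formatMessage({ data });
  const res = await openai.chat.completions.parse({
    model: MODEL,
    messages,
  });
  // Log the model output so the scheduled run leaves a visible result.
  console.log(res.choices[0].message.content);
}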
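The scheduled handler above relies on `formatMessage`, which this page never defines. As a rough sketch of what that step might look like, assume the endpoint returns a JSON array of items with `title` and `url` fields. That shape, the `Item` and `ChatMessage` types, and the prompt wording are all hypothetical and chosen only for illustration:

```typescript
// Hypothetical shape of the data returned by ENDPOINT; not specified on this page.
interface Item {
  title: string;
  url: string;
}

// Chat message in the role/content form accepted by chat completion APIs.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Turns fetched items into a system prompt plus one user message listing them.
function formatMessage({ data }: { data: Item[] }): ChatMessage[] {
  const list = data
    .map((item, i) => `${i + 1}. ${item.title} (${item.url})`)
    .join("\n");
  return [
    { role: "system", content: "Summarize the following items in three sentences." },
    { role: "user", content: list },
  ];
}
```

Keeping the formatting step a pure function makes it easy to check on its own before wiring it to a schedule.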

main.tsx


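import { email } from "https://esm.town/v/std/email";

// The `Email` type and `parseEmail` helper are assumed to be defined elsewhere in the val.
export default async function handleEmail(incoming: Email) {
  const result = await parseEmail(incoming);
  // The parameter is named `incoming` so it does not shadow the imported `email` function.
  await email({
    subject: `Email summary from: ${incoming.from}`,
    text: result,
  });
}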

Send and respond to emails

Your agent can communicate: create a val, forward repetitive emails to it, and it will act only on the ones that are important.
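One way to act only on the important messages is to gate the handler behind a small predicate. The sketch below is an assumption about how such a filter could look, not part of Val Town's API: the `InboundEmail` shape, the `isImportant` name, and the keyword list are all hypothetical.

```typescript
// Hypothetical minimal shape of a forwarded email; the real type may carry more fields.
interface InboundEmail {
  from: string;
  subject: string;
  text: string;
}

// Illustrative keywords only; tune these to your own inbox.
const URGENT_KEYWORDS = ["invoice", "outage", "deadline"];

// Returns true when the subject or body mentions any urgent keyword (case-insensitive).
function isImportant(msg: InboundEmail): boolean {
  const haystack = `${msg.subject}\n${msg.text}`.toLowerCase();
  return URGENT_KEYWORDS.some((kw) => haystack.includes(kw));
}
```

An email handler could return early when `isImportant` is false and summarize or forward only the rest. A model-based classifier could later replace the keyword check without changing the handler's structure.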

Instant deploys

The feedback loop matters more than ever: fast deploys mean quicker iteration cycles when you're running a self-improving program.


Start with a template

OpenAI Realtime API

OpenAI Realtime app that supports both WebRTC and SIP (telephone) users

MCP server

Demo MCP server to add numbers. Built with Hono MCP and the official MCP TypeScript SDK

Personal assistant

Your personal digital butler, ever vigilant and ever helpful in managing the complexities of your digital life


Instant deploys

Run live code on the web as fast as you can hit ⌘S

Cron jobs

Schedule any function in one click


AI pair programmer

Edit code and deploy instantly with agentic AI


Zero config devops

Deploy to fast, scalable infrastructure in seconds

Build your own AI agent today

Try Val Town for free